As the SEMIA project reaches its final stage, we will be releasing a few more posts in which we report on what we did, and learnt, over the course of the past two years.

In the first two items to appear, members of the University of Amsterdam team present some reflections on what developments in the field of computer vision can do for access to moving image collections. To begin with, we discuss the different ways of approaching such collections, and report on how we tweaked existing methods for feature extraction and analysis in order to offer users a more explorative alternative to search (currently still the dominant access principle). Next, we delve a little more deeply into the forms of classification this involves, the issues they raise, and how they impacted on our process. In a third post, the Amsterdam University of Applied Sciences team discuss their experience of building an interface on top of the functionality for image analysis that our computer scientist developed. And in a final one, we introduce four SEMIA-commissioned projects in which visual and sound artists use the data we generated in the feature analysis process, to devise (much more experimental) alternatives for engaging audiences with the archival collections we sampled for our purpose.

In this first item, we expand on our project objectives (as introduced on the About page on this site) elaborating on our vision for an explorative approach to collection access, and discuss the implications for feature extraction and analysis.

~

As we previously explained, our purpose in the SEMIA project was to find out how users might access moving images in digital form, not by searching for specific items (and in hopes of fulfilling a given need, in research or reuse) but in a more ‘explorative’ way, inspired in the process by the visual appearance of the films or programmes in a collection. To this end, we adapted methods and tools developed in the field of computer vision. This allowed us to extract sensory information from discrete objects in our database, combine it, and thus establish relations between said objects (a process we explain in a little more detail in our following post). Subsequently, we visualised the relations that emerged and built an interface around them. (For the more technically inclined: one should imagine this step as converting high-dimensional representations into a 2D representation while preserving the closeness or distance between the object features. The role of representation, likewise, will be touched upon in our next post.) In extracting image information, we focused on colour, shape, texture (or in computer vision terms, ‘visual complexity’ or ‘visual clutter’) and movement (or ‘optical flow’) in the fragments in our purpose-produced repository.

In the past few decades, access to the collections of (institutional) archives, whether analogue or digital, has tended to rely on written information, often in the form of item-level catalogue descriptions. Increasingly, such information is no longer stored in separate databases, but integrated into collection management systems that also contain digitised versions of related still images and/or the films or programmes themselves. In addition, records are more often part of larger networks, in which they can be cross-referenced (see e.g. Thorsen and Pattuelli 2016). But as a rule, patrons still need to approach the works they are looking for through associated descriptions, which are necessarily verbal. To this end, they need to search a system by formulating a query, using terminology that aligns with that used in the database.

Elsewhere on this site, we already touched upon some of the issues with this principle of access-by-search. On the one hand, it forces users to approach collections from the perspective of those who identified and described them, and on the basis of their prior interpretations. As it happens, the categories that moving image catalogues work with, and that inform such efforts, tend to be quite restrictive in terms of the interpretive frameworks they adhere to. Oftentimes, they cluster around productional data, plot elements, standardised labels for the activities, people or places featured (using set keywords and proper names) and/or genre identifiers (cf. Fairbairn, Pimpinelli and Ross 2016, pp. 5-6). In contrast, the visual or aural qualities of the described items – after all, difficult to capture verbally – tend to remain undocumented.[1] For historic moving images, this is problematic, as they are often valued not only for the information they hold but also for their look and ‘feel’. On the other hand, search infrastructures also operate on the built-in assumption that users are always looking for something specific. And moreover, that they can put into words what it is they are looking for – in the form of a query. Over the years, research on the topic has actually provided a great deal of evidence to the contrary (see e.g. Whitelaw 2015).

A query, in the words of literary scholar and digital humanist Julia Flanders (2014), is a “protocol or algorithm invoking […] content via a uniform set of parameters from an already known set of available data feeds or fields” (172). The phrase ‘already known’, in this sentence, is key, as it draws attention to the fact that database searchers need to be familiar from the outset with the (kinds of) datasets a database holds, in order to be able to use them to their advantage. In other words, search interfaces are more about locating things, than about finding out what the repositories they interface to, might contain. For this reason, designer, artist and interface scholar Mitchell Whitelaw (2015) calls them “ungenerous”: they withhold information or examples, rather than offering them up in abundance. Arguably, this restricts users in terms of what they may encounter, which kinds of relations between collection or database objects they can establish, and which kinds of insights about them they can gain.

The most common (and practical) alternative to searching collections is browsing them (Whitelaw 2015). Although definitions of this term diverge, authors tend to agree that it entails an “iterative process” of “scanning […] or glimpsing […] a field of potential resources, and selecting or sampling items for further investigation and evaluation” (ibid.). Browsing, in any case, is more open-ended than searching; arguably, one can even do it without a specific goal in mind. For Whitelaw, practices of browsing therefore challenge instrumentalist understandings of human information behaviour in terms of an “input-output exchange” – understandings that are quite common still in such disciplines as information retrieval (ibid.). Others before him have argued that because of this open-endedness, they also foster creativity (e.g. O’Connor 1988, 203-205) – a key objective, indeed, of the SEMIA project. In an upcoming article, we propose that this is due at least in part to the potential it offers for serendipitous discovery: for sudden revelations that occur as a result of unexpected encounters with (specific combinations of) data – or as in our case, items in a collection or database (Masson and Olesen forthcoming).

A connection made serendipitously: still from media artist Geert Mul’s Match of the Day (2004-ongoing) [click the image to view a fragment]

To enable browsing, Whitelaw (2015) argues, we need to “do more to represent the scale and richness of [a] collection”, and at the same time offer multiple inroads into it. We can do this for instance by devising new ways for users to establish relations between objects. Flanders, building on a suggestion by Alan Liu, proposes that in thinking about the constitution of digital collections, we adopt a ‘patchwork’ rather than a ‘network’ model. This invites us to view them not as aggregations of items with connections between them that “are there to be discovered”, but as assemblages of things previously unrelated but somehow still “commensurable” – in the sense that they can be mutually accommodated in some way (2014, 172). If we do this, a collection becomes “an activity” constantly performed, “rather than a self-evident thing or a set” (ibid.). Within the SEMIA context, we act in the assumption that the sensory features of collection items can serve as a means (or focal point) for making them ‘commensurable’. The basis for commensurability, here, could be likeness, but potentially also its opposite – because in exploring images on the basis of their visual features, the less similar or the hardly similar may also be productive. Arguably, the relations this suggests complement those that traditional archival categories already point to, and thereby allow us, in Whitelaw’s words, to present more of a collection’s richness.

In recent years, archival institutions have experimented with the use of various artificial intelligence tools to enrich their collection metadata, such as technologies for text and speech transcription.[2] No doubt, such initiatives are already contributing towards efforts to open up their holdings to a range of users. But when it comes to broadening the variety of possible ‘entry points’ to collections, visual analysis methods are especially promising. First, because they may help compensate for the almost complete lack of information, in the current catalogues and collection management systems, on the visual characteristics of archival objects. At the very least, extracting visual information and making it productive as a means for exploring a database’s contents may allow for previously unrecoverable items (such as poorly metadated ones) to become approachable or retrievable – and in the process, very simply, ‘visible’. A second, related reason is that such increased visibility may in turn entail that objects are interpreted in novel ways. Specifically, it can alert users to the potential significance of (perhaps previously unnoticed) sensory aspects of moving images, thus reorienting their interpretations along previously ignored axes of meaning. Interesting in this context is that, as we rely on visual analysis, we may not have to trust the system to also settle on what exactly those aspects, or the connections between them, might represent, or even ‘mean’. In principle, then, visualisation of our analyses might also offer an alternative to traditional catalogue entries that verbally identify, and thereby (minimally) interpret, presumably meaningful entities.

However, exploiting this potential does require significant effort, in the sense that one cannot simply rely on existing methods or tools, or even build on all of their underlying principles. As it happens, much research in the field of computer vision is also focused on identification tasks, and specifically, on automating the categorisation of objects. (We discuss this point further in our next post.) Visual features such as colour, shape, movement and texture are quite abstract – unlike the sorts of categories that catalogues, but also image recognition applications, tend to work with. For this reason, using them as the starting point for a database exploration entailed that our computer scientist had to tweak existing analysis methods. But in addition, it also meant that the team needed to deviate from its original intent to rely primarily on deep learning techniques, and specifically on the use of so-called ‘artificial neural networks’ (or neural nets), which learn their own classification rules based on the analysis of lots of data.

The first of these measures was basically that in the feature extraction process (which we also discuss in a little more detail in a subsequent post), we stopped short of placing the images in our database in specific semantic classes. In the field of computer vision, a conceptual distinction is often made between image features at different ‘levels’ of analysis. Considered from one perspective, those levels concern the complexity of the features that are extracted. They range from descriptions of smaller units (such as pixels in discrete images) to larger spatial segments (for instance sections of images, or entire images) – with the former also serving as building blocks for the latter (the more complex ones). But from another, complementary perspective, the distinction can also be understood as a sliding scale from more ‘syntactic’ to more ‘semantic’ features. For purposes of object identification, semantic ones are more useful, as they represent combinations of features that amount to more or less ‘recognisable’ entities. For this reason, computer vision architectures such as neural nets are trained for optimal performance at the highest level of analysis.[3] In SEMIA, however, it is precisely the more ‘syntactic’ (or abstract) features that are of interest.

So, our computer scientist, while inevitably having to work with a net trained for analysing images at the highest semantic level (specifically, ResNet-101, a Convolutional Neural Network or CNN), chose to scrape information rather at a slightly lower one (also a lower ‘layer’ in the network itself): there where it generally contains descriptions of sections of the objects featured in them. At this level, the net ‘recognises’ shapes, but without relating them to the objects they are supposedly part of (so that they can be identified).[4] Arguably, this allows users more freedom in determining which connections between images – made on the basis of their shared, or contrasting, features – are relevant, and how. (In our upcoming article, we consider this assumption more closely, and also critique it; see Masson and Olesen forthcoming.) Hopefully, this also makes for a more intuitive exploration, less restrained by conventional, because semantically significant, patterns in large aggregations of moving images.

The second measure we took to ensure a sufficient level of abstractness in the relations we sought to establish, was to not rely exclusively on methods for machine learning (and specifically, deep learning; see here for the difference). For some of the features we were concerned with, we reverted instead to the use of task-specific algorithms. As we previously explained (and will discuss further in our next post), early methods for computer vision used to involve the design of algorithms that could execute specific analysis tasks, and were developed to extract given sets of features. More recently, the field has focused more radically on the design of systems that enable automatic feature learning. Such systems are not instructed on which information to extract, but instead, at the training stage, make inferences on the topic by comparing large amounts of data. This way, they infer their own decision rules (see also our upcoming post). As we explained, training, here, is geared very much towards identification; that is, analysis at the semantic level. For this reason, we decided to only use the neural net to extract information about shape (or rather: what we, humans, perceive as such) – the area where it performed best as we scraped lower-level, ‘abstract’, information. To isolate colour and texture information, we reverted to other methods: respectively, the construction of colour histograms (in CIELAB colour space [5]) and the use of a Subband Entropy measure (see Rosenholtz, Li, and Nakano 2007). Movement information was extracted with the help of an algorithm for measuring optical flow.[6] This way, we could keep features apart, so as to ultimately make them productive as parameters for browsing.

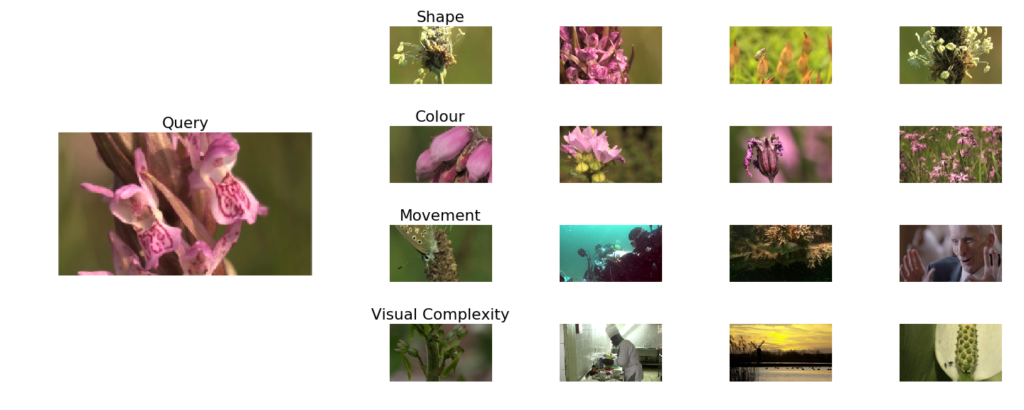

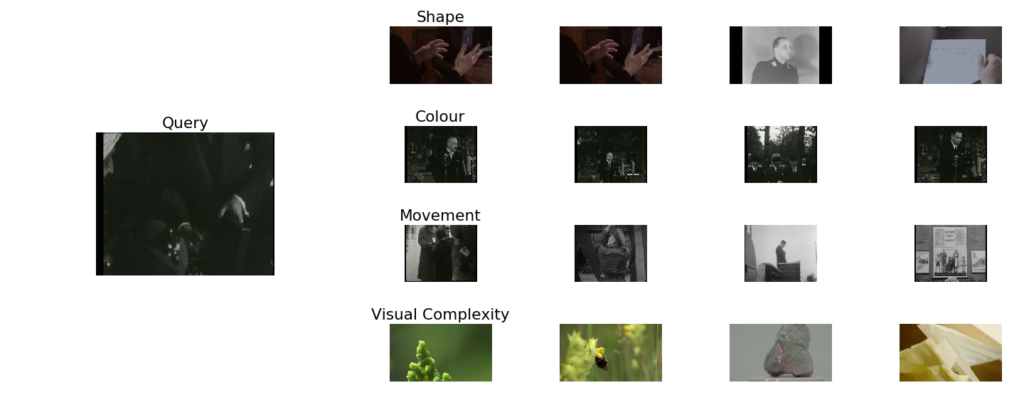

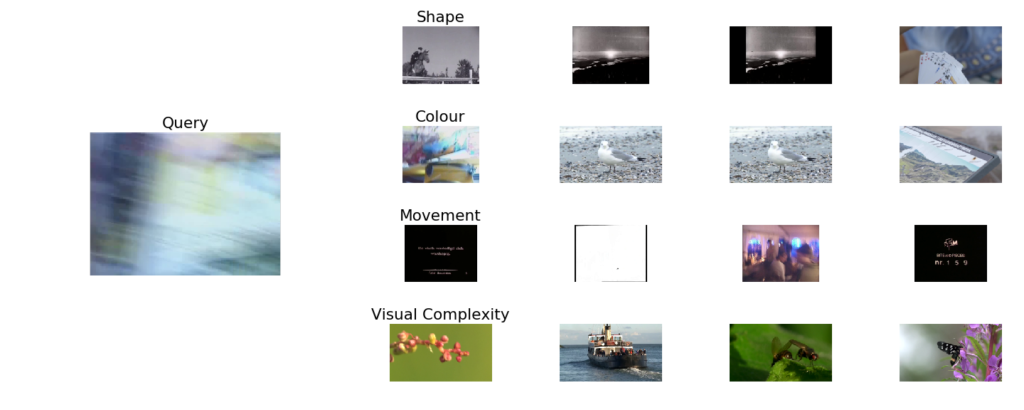

Query shots from the SEMIA database, along with four of their ‘nearest neighbours’ (closest visual matches) in the shape, colour, movement, and visual complexity feature spaces [provided by project member Nanne van Noord]

Yet as we learnt, even if feature extraction is done with a minimum of human intervention in the labelling of images (and image sections), we can never truly cancel out the detection of semantic relations altogether. This is hardly surprising, as it is precisely the relations between low-level feature representations and objects that have long since been exploited to detect semantic relations and objects – even in very early work on computer vision. (Our computer scientist tends to illustrate the underlying logic or intent as follows: oranges are round and orange, so by detecting round and orange objects, we can find oranges.) Therefore, some feature combinations are simply too distinctive not to be detected with our chosen approach – even if we do our best to block the algorithms’ semantic ‘impulse’ (as the first and second image cluster above make clear).[7] At the same time, our examples show that the analysis of query images also highlights visual relations that initially seem more ‘illegible’, and therefore, invite further exploration. In this sense, our working method does yield surprising results or unexpected variations. (Such as, the connection, in the first series above, between the movement of an orchid’s petals in the wind, and those of a man presumably gesticulating while speaking.)

In the course of the project, our efforts have been geared at all times towards stimulating users to explore those less obvious connections. This way, we hope not only to significantly expand the range of metadata available for archival moving images, but also to allow for a revaluation of their sensory dimensions – also in the very early (‘explorative’) stages of research and reuse.

– Eef Masson

Notes

[1] Oftentimes, archival metadata do specify whether films or programmes are in black and white or colour, or sound or silent, and they may even name the specific colour or sound systems used. But they generally do not provide any other item-specific information about visual or aural features.

[2] For example, the Netherlands Institute for Sound and Vision, our project partner, has recently been exploring the affordances of automated speech recognition.

[3] Many thanks to Matthias Zeppelzauer (St. Poelten University of Applied Sciences) for helping us gain a better understanding of these conceptual distinctions.

[4] For more on how neural nets specifically ‘understand’ images, see also Olah, Mordvintsev, and Schubert 2017.

[5] The histograms further consisted of 16 bins for the description of each colour dimension (resulting in a feature representation of 4096 dimensions).

[6] Movement information was described by means of a histogram of the optical flow patterns. Angle and magnitude were separately binned for each cell in a three by three grid of non-overlapping spatial regions (an approach akin to the HOFM one described in Colque et al. 2017). The procedure for colour, texture and movement information extraction was always applied to five (evenly spaced) frames per fragment (shot) in our database.

[7] Exact matches rarely occur, because for the purposes of the project, the detection settings were tweaked in such a way that matches between images from the same videos were ruled out. (Therefore, only duplicate videos in the database can generate such results.)

References

Colque, Rensso V.H.M., Carlos Caetano, Matheus T.L. De Andrade, and William R. Schwartz. 2017. “Histograms of Optical Flow Orientation and Magnitude and Entropy to Detect Anomalous Events in Videos.” IEEE Transactions on Circuits and Systems for Video Technology 27, no. 3: 673-82. Doi: 10.1109/TCSVT.2016.2637778.

Fairbairn, Natasha, Maria Assunta Pimpinelli, and Thelma Ross. 2016. “The FIAF Moving Image Cataloguing Manual” (unpublished manuscript). Brussels: FIAF.

Flanders, Julia. 2014. “Rethinking Collections.” In Advancing Digital Humanities: Research, Methods, Theories, ed. by Paul Longley Arthur and Katherine Bode, 163-174. Houndmills: Palgrave Macmillan.

Masson, Eef, and Christian Gosvig Olesen. Forthcoming [2020]. “Digital Access as Archival Reconstitution: Algorithmic Sampling, Visualization, and the Production of Meaning in Large Moving Image Repositories.” Signata: Annales des sémiotiques/Annals of Semiotics 11.

O’Connor, Brian C. 1988. “Fostering Creativity: Enhancing the Browsing Environment.” International Journal of Information Management 8, no. 3: 203-210. Doi: 10.1016/0268-4012(88)90063-1.

Olah, Chris, Alexander Mordvintsev, and Ludwig Schubert. 2017. “Feature Visualization: How neural networks build up their understanding of images.” Distill 2, no. 11. Doi: 10.23915/distill.00007.

Rosenholtz, Ruth, Yuanzhen Li, and Lisa Nakano. 2007. “Measuring Visual Clutter.” Journal of Vision 7, no. 17. Doi: 10.1167/7.2.17.

Thorsen, Hilary K., and M. Christina Pattuelli. “Linked Open Data and the Cultural Heritage Landscape”. In Linked Data for Cultural Heritage, ed. by Ed Jones and Michele Seikel, 1-22. Chicago: ALA Editions.

Whitelaw, Mitchell. 2015. “Generous Interfaces for Digital Cultural Collections.” Digital Humanities Quarterly 9, no. 1.